Significant players in tech seem to have adopted AI as a religion. Well-known tech titans insist AI can replace all human jobs while companies demand employees use it (presumably to replace themselves). It will solve the world’s problems, they tell us, and will soon become sentient and lead us all to a deeper understanding of, well… everything.

Unfortunately, as with much of AI, the facts don’t support the conclusions. Here’s a sampling of how it’s going:

A New Scientist article titled “AI hallucinations are getting worse – and they’re here to stay” reports that despite so-called “reasoning upgrades,” AI chatbots have grown less reliable. “An OpenAI technical report evaluating its latest LLMs showed that its o3 and o4-mini models… had significantly higher hallucination rates than the company’s previous o1 model.” For instance, in summarizing factual material, o3 had a 33% hallucination rate and o4-mini a 48% rate, compared with 16% for o1.

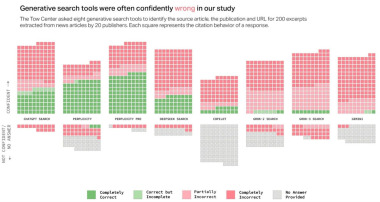

Another study from the Tow Center for Digital Journalism examined eight AI search engines. They used quoted text from the article as the search term and asked the engines to provide the source article, the news organization, and the URL. As you can see from the chart below, it didn’t go well.

IEEE Spectrum, the magazine of the Institute of Electrical and Electronics Engineers (IEEE), titled its piece, “Why You Can’t Trust Chatbots—Now More Than Ever: Even after language models were scaled up, they proved unreliable on simple tasks.”

After noting the infamous unreliability of LLMs like ChatGPT, the article states, “A common assumption is that scaling up the models driving these applications will improve their reliability—for instance, by increasing the amount of data they are trained on, or the number of parameters they use to process information. However, more recent and larger versions of these language models have actually become more unreliable, not less, according to a new study.”

Almost comically, the less reliable LLMs display more confidence in their ignorance. “…more recent models [are] significantly less likely to say that they don’t know an answer, or to give a reply that doesn’t answer the question. Instead, later models are more likely to confidently generate an incorrect answer.” Sounds like some old bosses of mine.

This would be amusing if the stakes weren’t so high. The article goes on to recite the business risks of relying on AI with an astonishing error rate. It’s the old ‘garbage in/garbage out.’ Rely on bad information and you make bad decisions. The very act of having to review and verify AI outputs is cited as a productivity-killer.

Finally, there’s “model collapse.” That’s what happens when AIs are trained on older AI-generated content. “Over time,” wrote Bernard Marr in Forbes, “this recursive process causes the models to drift further away from the original data distribution, losing the ability to accurately represent the world as it really is. Instead of improving, the AI starts to make mistakes that compound over generations, leading to outputs that are increasingly distorted and unreliable.”
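The recursive drift Marr describes can be seen in a toy setting. The sketch below is only an illustration under loud assumptions, not how any real LLM is trained: a simple bell curve stands in for the "model," each generation is fitted only to samples produced by the previous generation, and the function name `simulate_collapse` and its parameters are hypothetical.

```python
import random
import statistics


def simulate_collapse(generations=500, sample_size=10, seed=0):
    """Toy stand-in for model collapse (an assumption, not a real training loop).

    Each generation fits a Gaussian to a small sample drawn from the
    previous generation's fitted Gaussian, never from the original data.
    Returns a list of (mean, stdev) pairs, one per generation.
    """
    rng = random.Random(seed)
    mean, stdev = 0.0, 1.0  # generation 0: the "real world" distribution
    history = [(mean, stdev)]
    for _ in range(generations):
        # "Train" on content generated by the previous model only.
        samples = [rng.gauss(mean, stdev) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        stdev = statistics.stdev(samples)
        history.append((mean, stdev))
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for generation in (0, 10, 100, 500):
        mean, stdev = history[generation]
        print(f"generation {generation}: mean={mean:.4f}, stdev={stdev:.6f}")
```

In this toy version the estimation errors of each generation are inherited by the next, so the fitted spread tends to shrink and the center wanders away from the original values. That is the compounding of mistakes the quote describes, in miniature.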

AI even fails significantly on news summaries, which you’d think would be fairly straightforward. The BBC examined AI assistants’ summaries of its news content. It found the following:

- 51% of all AI answers to questions about the news were judged to have significant issues of some form.

- 19% of AI answers which cited BBC content introduced factual errors – incorrect factual statements, numbers and dates.

- 13% of the quotes sourced from BBC articles were either altered from the original source or not present in the article cited.

AI may be tech’s new religion, but you might say its evangelists are asking us to worship false idols. AI trained on hoards of data scooped from any and every source is not living up to the hype. Perhaps AI is, after all, not an end but a means: a pattern recognition engine on steroids that has functional use within closed systems, like specific medical specialties, fixed regulatory data, or airport schedules. Alas, tech mavens may have to look elsewhere for salvation.

Leonce Gaiter – Vice-President, Content & Strategy